Can you lie? A Talk with ChatGPT

You have probably heard of ChatGPT, the AI-based chatbot that has taken the internet by storm. But what exactly is it, how does it work, and what can it do? I was curious to find out, so I wanted to have a go at “talking” to it myself.

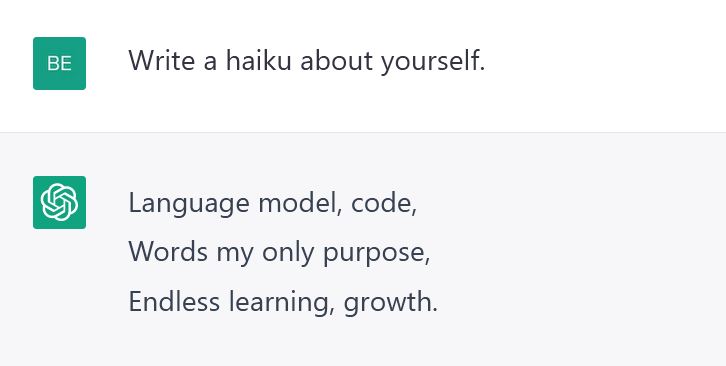

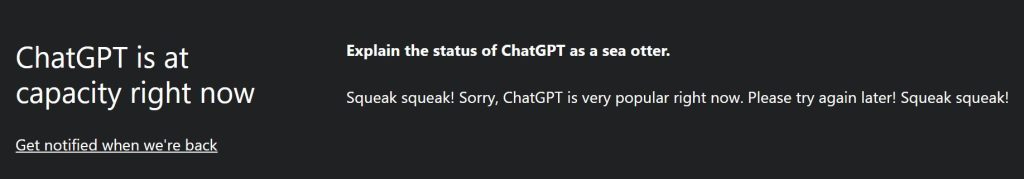

My first attempt to use it ended in failure. Trying to create an account, I was met with the error page below, telling me the site was at capacity, meaning too many people were using it at the time. Every time the error page comes up, it also includes a prompt to the bot itself, asking it to explain its status in various forms, such as haikus and acrostic poems about being at capacity. Despite technically being error messages, even these little prompts speak to the bot's ability to write “creatively” and attest to its current popularity.

What are you?

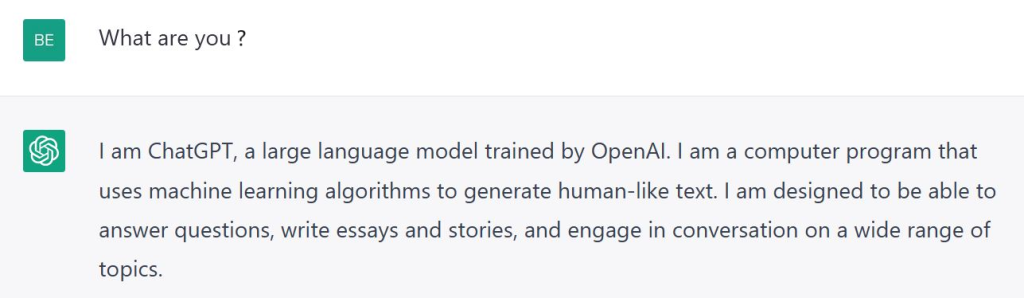

My second attempt to experiment with the bot was more successful. I asked it to write poetry and summaries of books, and asked it about itself. I was curious about its limitations, and perhaps its dangers. It is impossible to google ChatGPT without seeing articles about how easily it could be abused for cheating in schools, or how quickly it might replace white-collar workers. These are not new fears; being replaced by technology, or having to adapt dramatically to it, has been a boogeyman of progress for a long time.

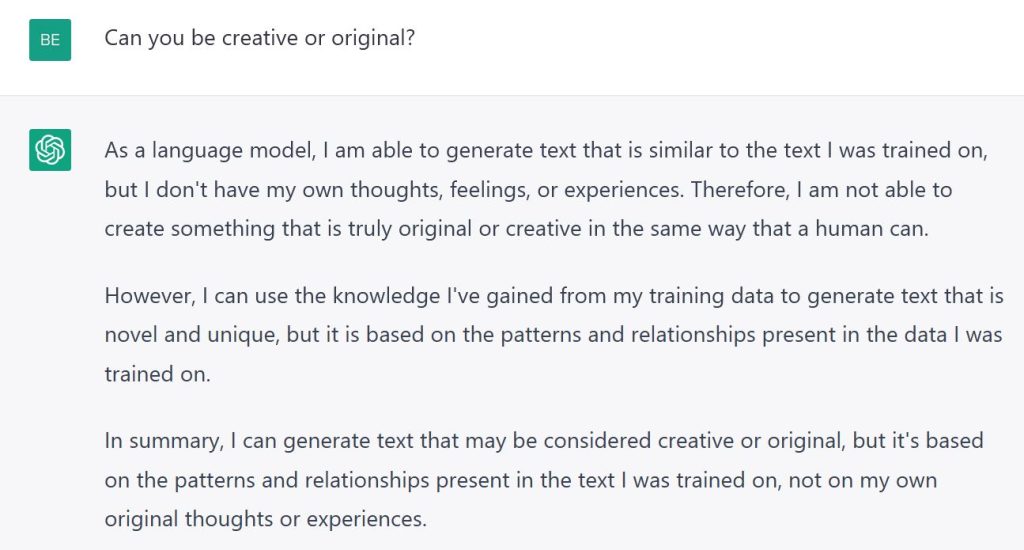

Given that ChatGPT can write like a human, it is easy to imagine it replacing human writers. But it is important to remember how the bot functions. Essentially, everything it generates is based on the materials it was trained on; it reassembles what it knows rather than creating anything novel. As such, anything that requires original synthesis or truly creative writing lies outside its reach.

Can you be creative?

There seems to be a growing consensus that the bot might be a very helpful tool for compiling reports, for search engine optimisation, or for writing product descriptions – tasks that are more mechanical and require the summarisation of many inputs. But at the end of the day, it is another tool in a toolkit, not something that can easily replace human creativity.

Even so, the bot is a generalist. One of its limitations is its training material – if it was not trained on something, it will simply not know about it. If a text requires specialist knowledge of a topic, you can coax the bot into writing it, but a human author might have to insert highly specific details themselves.

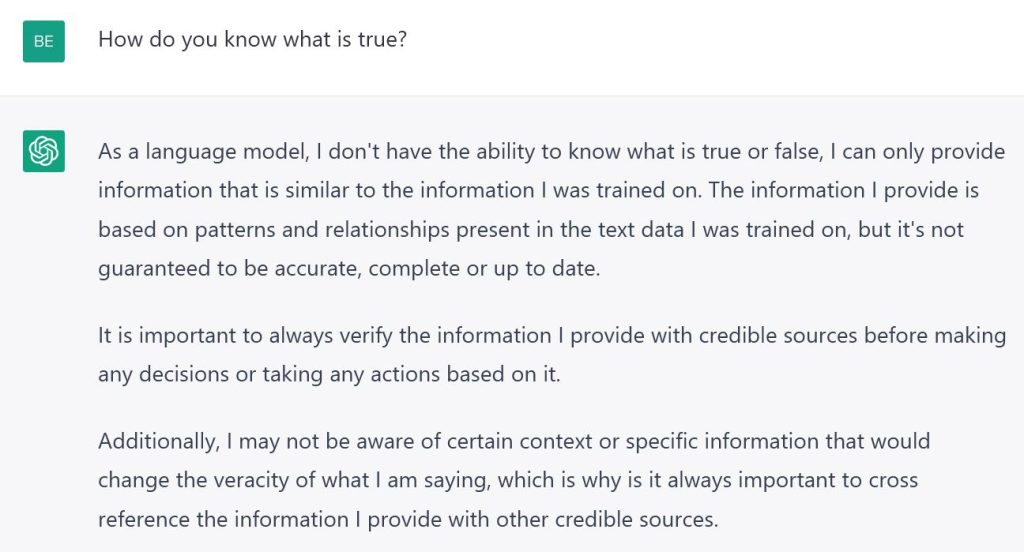

How do you know what is true?

Perhaps even more important in an era where information and misinformation are so plentiful, the bot has no concept of “truth”. It simply reflects what it was trained on, unable to be self-critical or reflective.

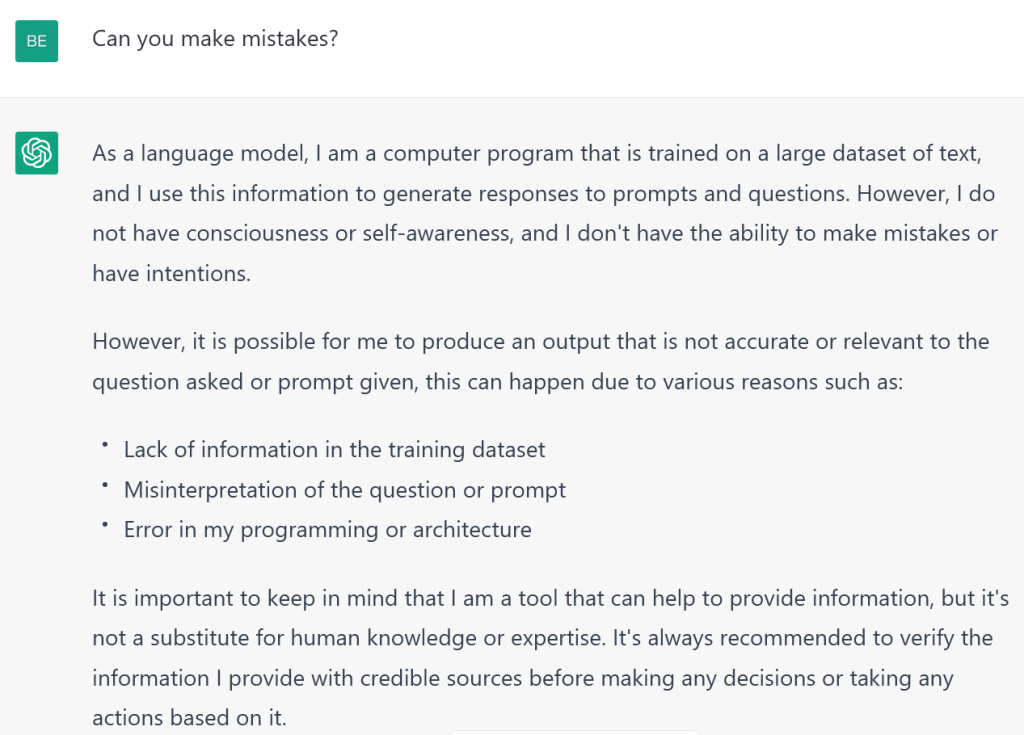

If the data it was trained on is biased or incorrect, it is unable to correct itself. It even recommends fact-checking any of its outputs against credible outside sources. However, if fed information that directly conflicts with its training data, the bot seems to flag the discrepancy. Compared to its sibling model, InstructGPT, ChatGPT has “safety mitigations” designed to flag any deliberate misinformation and stop any attempts at harmful behaviour.

But what about cheating and plagiarism in academic contexts? It would be easy to instruct the bot to write anything from homework assignments to admissions essays for university. And worse, it is practically impossible to trace such abuse of the bot.

Understandably, this has worried schools and educators, who might now see themselves in an arms race with students to prevent such use of the bot. In a recent interview, OpenAI CEO Sam Altman said that his company would work to develop tools to try to detect plagiarism. But he also argued that educators had adapted in the past to technologies, such as laptops and calculators, that threatened the status quo of education, and that they might have to do so again.

Can you make mistakes?

At the end of the day, seeing a bot do such “advanced” things, and being able to experiment with it yourself, is a fascinating exercise, and may help assuage any fears that we are on the verge of being replaced. ChatGPT is still far from perfect – it repeats itself a lot and sometimes follows instructions too literally – but it might be an important stepping stone in how we continue to use AI to improve workflows and automate processes.

Sources

ChatGPT CEO Responds to Plagiarism Concerns Amid School Bans

ChatGPT: Optimizing Language Models for Dialogue

How Will ChatGPT Affect Your Job If You Work In Advertising And Marketing?